Introduction

Digital Humanities (DH) is a multifaceted field that combines two distinct but interrelated forms of inquiry. The first entails the application of digital methods to traditional humanities questions, allowing scholars to explore historical texts, artifacts, and cultural phenomena through computational approaches. The second, equally important facet involves the application of humanistic inquiry to digital technologies, emphasizing a critical understanding of the ethical, historical, and political dimensions of computational processes and methods. The true essence of Digital Humanities, however, lies in the synthesis of these two impulses: it goes beyond computational analysis alone to engage with digital tools through self-reflection and careful consideration of their impact on research, culture, and interpretation.

In this context, Digital Humanities stands as more than a simple convergence of computational techniques and digitized materials. It embodies a mindset invested in the thoughtful use of digital tools, preserving the integrity of data while recognizing the complexities of interpreting and representing information. By embracing this reflexive approach, Digital Humanities differentiates itself from other computational fields like natural language processing, where the hermeneutic relationship between methods and the subject of study might be overlooked.

What makes Digital Humanities particularly exciting is its ability to employ computational techniques to unveil fresh perspectives on texts and collections of images. For researchers accustomed to close reading and qualitative analysis, the incorporation of digital tools offers an opportunity to explore larger corpora and make claims across multiple texts, broadening the scale and scope of inquiry. Key concepts from influential works, such as “Humanities Approaches to Graphical Display” by Johanna Drucker, “Distant Reading” by Franco Moretti, and “Against Cleaning” by Katie Rawson and Trevor Muñoz, have inspired me to challenge traditional assumptions and engage in a more diverse range of analytical methods.

Johanna Drucker’s work inspired me to think beyond the usual assumptions and norms of data visualization. It opened up possibilities for being reflexive about the process of data interpretation and about how data visualization constructs objectivity.

Similarly, Franco Moretti’s idea of ‘distant reading’ has had a large influence on my approach to research. As someone first trained in qualitative methods and discourse analysis, I had previously given more weight to close reading. The concept of distant reading shifted my approach to research and methodology because it made me aware of the utility of abstracting away from particularities and minute structures in order to reveal patterns at larger scales. Though I have not stopped practicing close reading, I find the movement between fine-grained and overarching scales of analysis to be both generative and essential for challenging my own assumptions.

Lastly, Katie Rawson and Trevor Muñoz’s piece “Against Cleaning” has influenced how I approach creating datasets. Their work calls for paying greater attention to the small differences in texts that might be stripped out when cleaning data. They do not suggest avoiding data processing altogether, but rather creating indexes and controlled vocabularies that preserve both the original text and the more abstract, standardized text derived from it. By documenting the process of transforming data from “messy” to “clean,” we can be precise about how we interpret discourse and how we form judgments about the meaning, significance, or importance of information.
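The idea of an index that keeps the original string next to its standardized form can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the `IndexEntry` structure, the `index_value` function, and the vocabulary entries are hypothetical and are not taken from “Against Cleaning” or from any of the projects described here.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class IndexEntry:
    original: str    # the string exactly as it appears in the source
    normalized: str  # the standardized form derived from it
    rule: str        # which transformation produced the normalized form


# Hypothetical controlled vocabulary: variant form -> preferred term.
CONTROLLED_VOCABULARY = {
    "stock exch.": "stock exchange",
    "bourse": "stock exchange",
}


def index_value(original: str) -> IndexEntry:
    """Standardize a value while keeping the original alongside it."""
    # Lowercase and collapse whitespace to look the value up.
    key = " ".join(original.lower().split())
    if key in CONTROLLED_VOCABULARY:
        return IndexEntry(original, CONTROLLED_VOCABULARY[key], "controlled-vocabulary")
    if key != original:
        return IndexEntry(original, key, "lowercase+whitespace")
    return IndexEntry(original, original, "unchanged")
```

Because every entry records the rule that produced it, the path from “messy” to “clean” stays documented and reversible, and the original wording is never overwritten.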

Visions for the future of Digital Humanities are shaped by several emerging trends in the field. Among these, the promises and perils of artificial intelligence (AI) are impossible to ignore. Recent developments in Large Language Models (LLMs) have produced a paradigm shift in the capabilities of computational natural language processing. Not only do LLMs outperform traditional NLP methods in some prediction and text generation tasks, but they can also be instructed to reason “step-by-step” about classifications and predictions, thereby opening natural language processing to more reflexive insights about how text is processed. Additionally, the confluence of post-colonial theory and critical race theory with Digital Humanities raises questions that come to the fore in several core concerns of the field. Scholarship must continue to investigate the complex relations among colonial legacies, imperialism, globalization, deglobalization, categorization, memory, repatriation, and cultural communication. Moreover, the development of mapping technologies in GIS presents both opportunities and challenges for DH scholars. While new products and services reduce the barriers and complexities involved in mapping, the growth of geodata demands a more humanistic approach to spatial representation. Future digital humanities scholars will have to contend with experiences of space and place that cannot be captured by the mapping techniques built into current software packages and services.

In conclusion, Digital Humanities is a dynamic and interdisciplinary domain that marries digital methodologies with inquiries into culture, history, and ethics. As a synthesis of computational and critical practices, it promotes a reflexive approach to digital tools, fostering nuanced interpretations and meaningful insights into the complexities of historical and cultural phenomena. By embracing emerging trends and diverse perspectives, Digital Humanities promises to be a vibrant and transformative force, enriching research and encouraging fresh engagements with digital scholarship.

Vision Statement

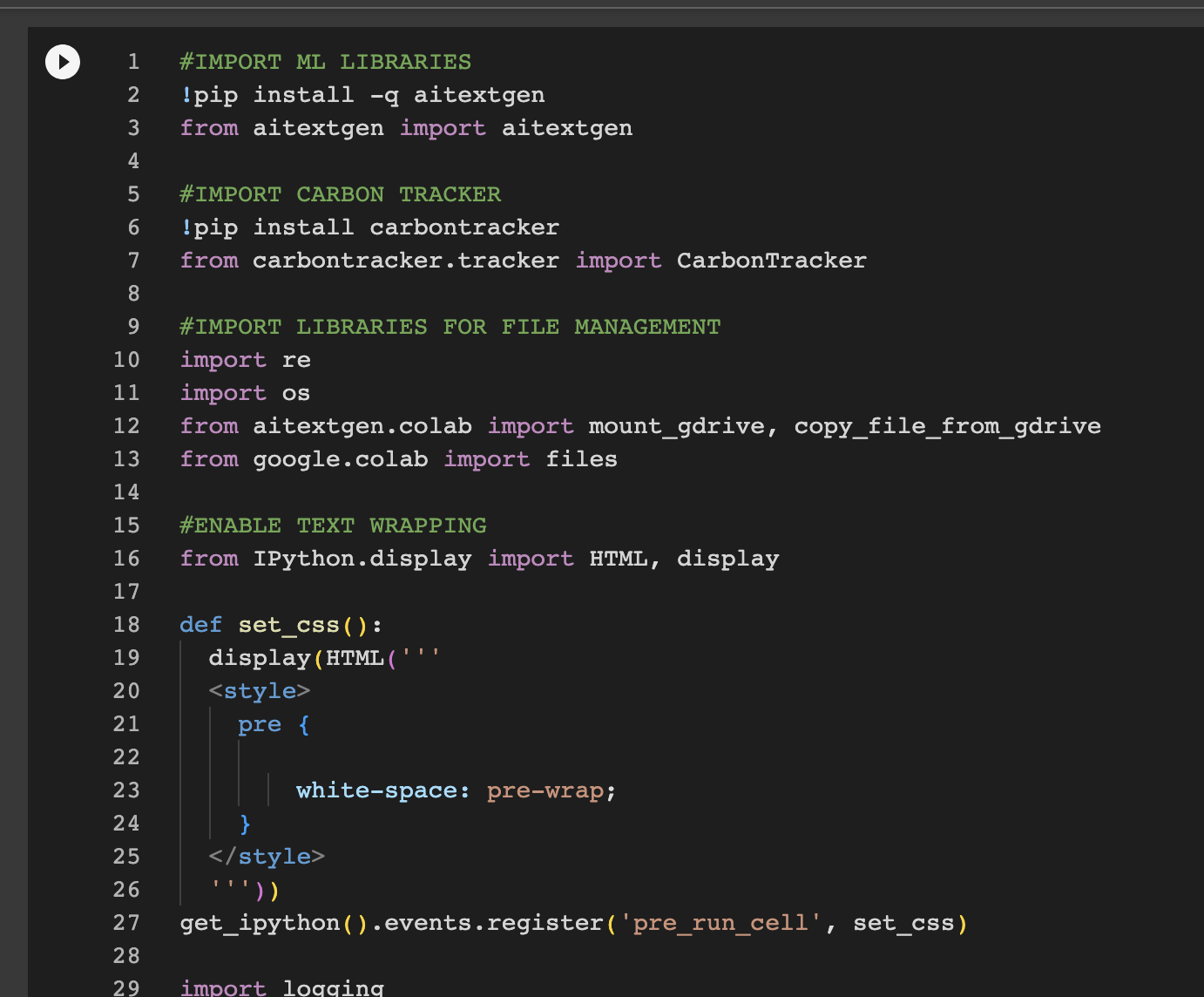

My contributions to Digital Humanities have centered on developing methods for combining natural language processing techniques with GIS in order to foster a critical dialogue between digital humanities, information studies, and geography. My efforts focus on exploring complex patterns within textual artifacts using named entity recognition, data visualization, machine learning, GPT-based classification, and historic map georectification. In each of the projects listed below, I endeavor to combine computational techniques for processing historical materials with methods for visualization and mapping. Though some of these projects were speculative and experimental, they helped me develop skills and techniques that I can use in my future research.

In addition to using DH techniques for research, I have developed hands-on workshops that share with members of the broader public the techniques and theoretical insights I have gained through working on digital humanities projects. As an educator, I strive to inspire others through training sessions that foster a culture of critical inquiry and technical fluency. In workshops such as “Introduction to Text Generation,” I introduced students to various techniques for text generation as well as to ethical concerns regarding bias. By learning how to use these models, students gained a newfound appreciation for how artificial intelligence is built and how sensitive language models are to their training data.

Selected Digital Humanities Projects

-

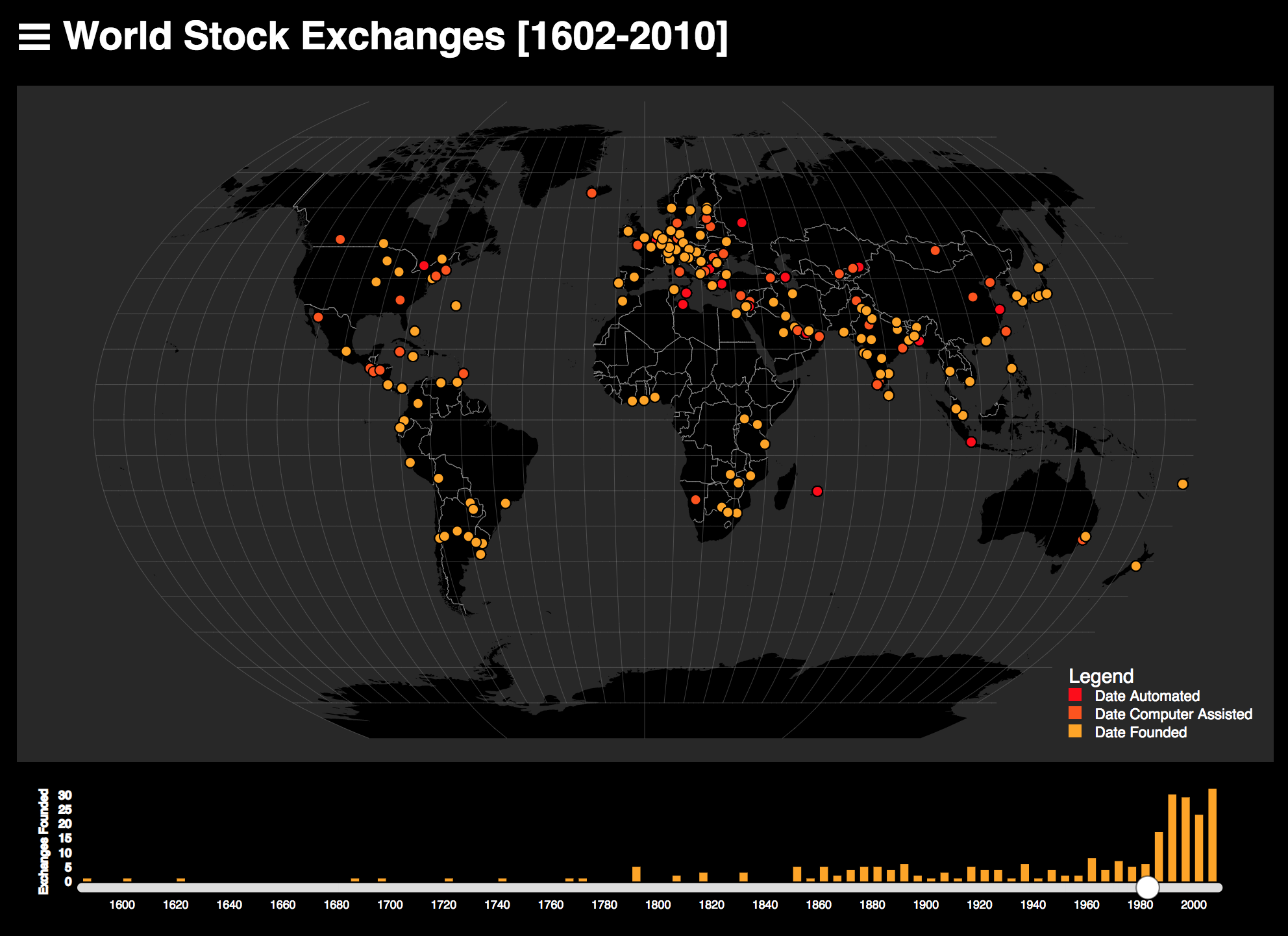

World Stock Exchanges

For Introduction to Digital Methods & Scholarship (INF STD 289), I developed a programmatic method for structuring unstructured historical data about stock exchanges. Using Natural Language Processing (NLP), I extracted relevant dates and geographic locations. This data was then geocoded so that the locations and dates of historic stock exchanges could be mapped. My collaborator Laura Jara helped develop a controlled vocabulary to classify stock exchanges, and, using a mix of programmatic and manual methods, we annotated the data to identify shifts from face-to-face markets toward computerized exchanges.
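The general shape of such a pipeline, from free text to a mappable record, can be sketched as follows. This is a deliberately simplified stand-in and not the method used in the project: a regular expression takes the place of NLP-based date extraction, and a tiny hand-made gazetteer (`GAZETTEER`, with approximate coordinates) takes the place of named entity recognition and a geocoding service. All names in the block are hypothetical.

```python
import re

# Hypothetical gazetteer: place name -> approximate (latitude, longitude).
GAZETTEER = {
    "Amsterdam": (52.37, 4.90),
    "London": (51.51, -0.13),
    "New York": (40.71, -74.01),
}

# Four-digit years from 1500 through 2099.
YEAR_PATTERN = re.compile(r"\b(1[5-9]\d{2}|20\d{2})\b")


def structure_record(text: str) -> dict:
    """Turn a free-text description into a record with years and places."""
    years = [int(y) for y in YEAR_PATTERN.findall(text)]
    places = [
        name
        for name in GAZETTEER
        if re.search(rf"\b{re.escape(name)}\b", text)
    ]
    return {
        "text": text,  # keep the source text next to the derived fields
        "years": years,
        "places": [
            {"name": p, "lat": GAZETTEER[p][0], "lon": GAZETTEER[p][1]}
            for p in places
        ],
    }
```

A record produced this way can be written out as a row of a CSV file or a GeoJSON point and loaded into mapping software. A gazetteer lookup will miss historical place names and ambiguous toponyms, which is one reason manual annotation remains part of the workflow.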

-

![NASDAQ IN THE NEWS [1969-1989]](https://www.automatedfutures.net/wp-content/uploads/2023/07/screenshot.jpg)

NASDAQ IN THE NEWS [1969-1989]

For Introduction to Digital Humanities I used a combination of natural language processing, network analysis and mapping to tag historic news articles regarding the NASDAQ stock exchange and to map networks of associations in concordances of text.
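A concordance and the association network derived from it can be sketched with the Python standard library alone. The function names, the window size, and the stopword handling below are assumptions made for illustration; they do not describe the tools actually used in the project.

```python
import re
from collections import Counter


def tokenize(text):
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def concordance(tokens, keyword, window=3):
    """Return a (left context, keyword, right context) triple for each hit."""
    hits = []
    for i, tok in enumerate(tokens):
        if tok == keyword:
            left = tokens[max(0, i - window):i]
            right = tokens[i + 1:i + 1 + window]
            hits.append((left, tok, right))
    return hits


def cooccurrence_edges(hits, stopwords=frozenset()):
    """Count how often each context word appears near the keyword."""
    edges = Counter()
    for left, keyword, right in hits:
        for neighbor in left + right:
            if neighbor not in stopwords and neighbor != keyword:
                edges[(keyword, neighbor)] += 1
    return edges
```

Each key in the resulting counter is an edge between the keyword and a neighboring word, and each count is an edge weight, so the output can be passed to a network analysis or visualization tool as a weighted edge list.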

-

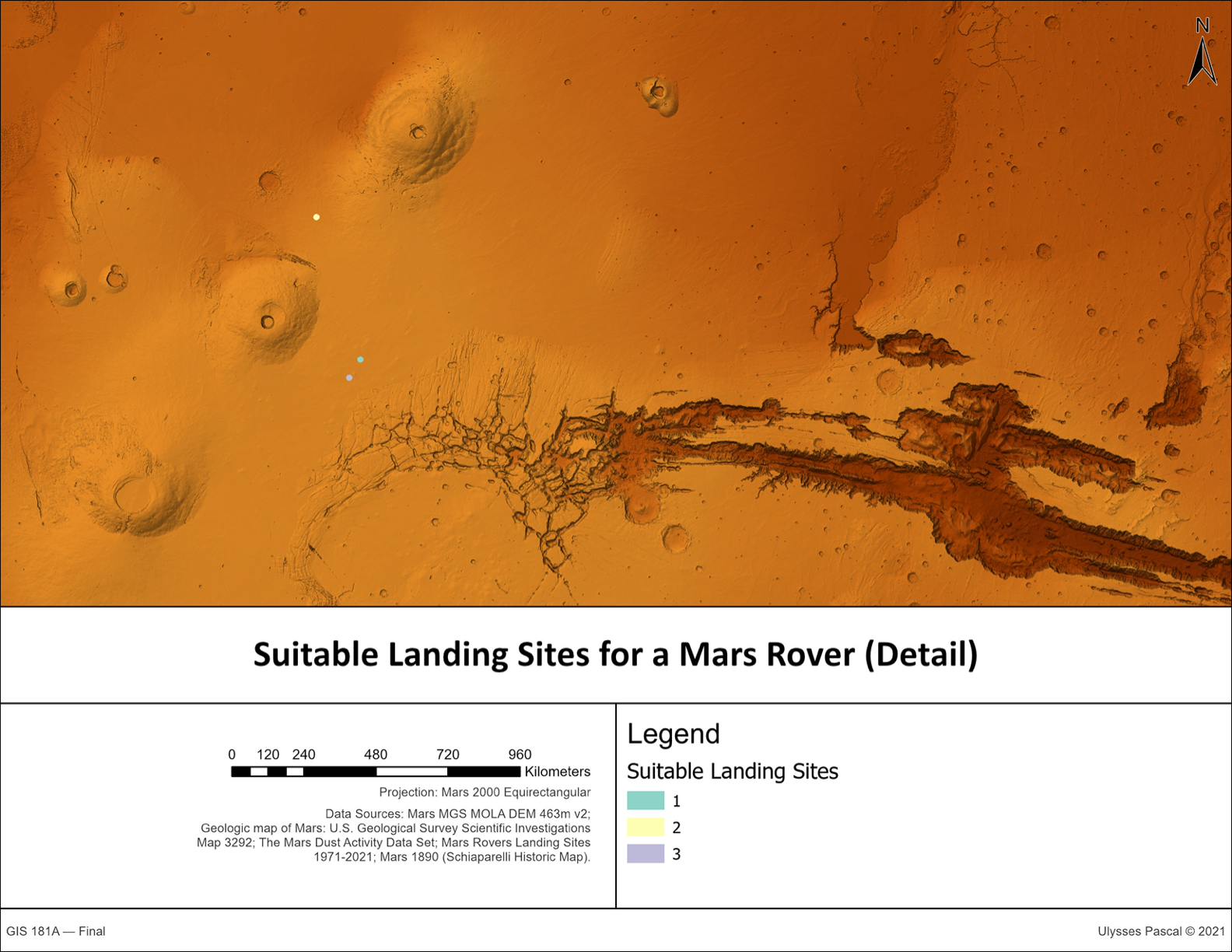

Revisiting Schiaparelli’s Martian Canale

For Geography 181A (Intermediate GIS), I conducted an analysis of Giovanni Schiaparelli’s historic maps of Martian Canale. In this speculative project, I georectified Schiaparelli’s historic maps and combined them with contemporary remote sensing data on conditions on the Martian surface to identify suitable landing sites for studying Schiaparelli’s Canale.

-

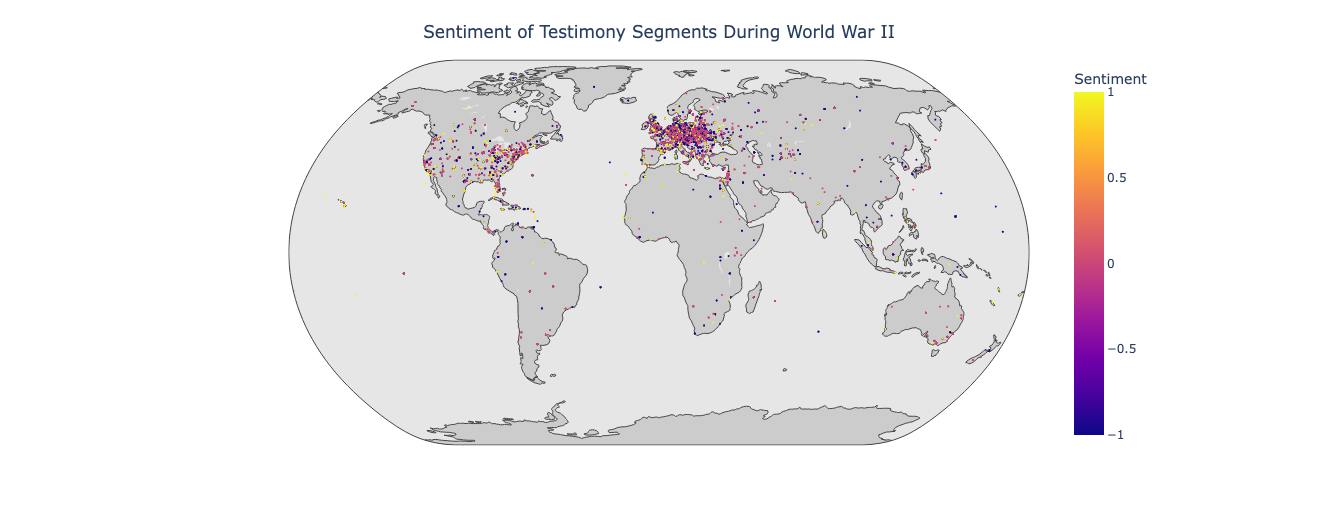

Geographies of Affect–Place and Sentiment in Holocaust Testimonies

Contemporary sentiment analysis tools lack the level of nuance and dedication to detailed description that Boder exhibited. These tools are limited along multiple dimensions, including their inability to account effectively for tone, sarcasm, negation, comparatives, and multilingual data, and these weaknesses are amplified when the tools are applied to the complex texts studied in digital humanities. For this capstone project, we tested different implementations of sentiment analysis to compare their affordances and limitations. I compared GPT-3 with GPT-2 and a fine-tuned GPT-2. I then mapped the results to visualize the geographic distribution of sentiment before, during, and after World War II.
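The negation problem mentioned above is easy to reproduce with a toy lexicon-based scorer. The word lists and both functions below are invented for illustration; they are unrelated to the GPT-based models compared in the project and only show why counting sentiment words, without attention to context, misreads even simple sentences.

```python
# Hypothetical miniature sentiment lexicon.
POSITIVE = {"good", "safe", "kind"}
NEGATIVE = {"bad", "afraid", "cruel"}
NEGATORS = {"not", "never", "no"}


def tokens_of(text):
    """Lowercase, split on whitespace, and strip surrounding punctuation."""
    return [t.strip(".,;:!?\"'") for t in text.lower().split()]


def naive_score(text):
    """Count positive words minus negative words, ignoring context."""
    return sum((t in POSITIVE) - (t in NEGATIVE) for t in tokens_of(text))


def negation_aware_score(text):
    """Flip the polarity of the next sentiment word after a negator."""
    score = 0
    negate = False
    for t in tokens_of(text):
        if t in NEGATORS:
            negate = True
            continue
        polarity = (t in POSITIVE) - (t in NEGATIVE)
        if polarity:
            score += -polarity if negate else polarity
            negate = False  # a negator only affects one sentiment word
    return score
```

For the sentence "We were not safe." the naive scorer returns a positive score, while the negation-aware version returns a negative one. The second function is still crude: it has no notion of scope, tone, or sarcasm, which are exactly the dimensions where more context-sensitive models need to be tested.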

© Ulysses Pascal