Developing an NLP-driven research method

I am at the early stages of developing a new research strategy for analyzing historical materials. Given the enormous volume of digitized but unstructured text stored in online databases such as Factiva, it is easy to feel overwhelmed when beginning to research a new topic. I envision a method for digesting these texts: using named entity recognition (NER), I want to parse the text into a structured dataset of entities and their concordances. The system would likely make two passes. First, it would use NER to extract relevant entities from the text and store them in a list. Second, it would use that list to produce a dataset pairing each name with its concordance, meaning the passages in which it appears. At the outset, the minimal categories of data worth extracting for historical research seem to be persons, organizations, locations, times, and sources. Ideally, this data could be used to visualize networks of association or geographical transformations over time.

Clearly, the main difficulty of the project is how to formalize relevant notions of ‘association’ and how to handle homonyms (different entities that share a name) and aliases (one entity known by several names). Because of the nature of news articles, it is unclear what co-occurrence within a single document signifies. For example, two people interviewed by a journalist for the same story must have some sort of relation to one another, yet it is impossible to infer from this fact alone what the content of that relation is. A clearer notion of association may come from collecting multiple instances of co-occurrence: if two entities are often mentioned together across many news articles, perhaps there is an important relationship between them. I doubt that an algorithmic procedure can elucidate what the relationship is without human interpretation. However, I do believe that an algorithm can help direct a researcher’s attention to important relationships.
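The two passes described above, plus a count of how often pairs of entities appear in the same article, might be sketched in Python roughly as follows. This assumes the articles have already been exported as plain text. The `extract_entities` function is a hypothetical stand-in: a crude capitalized-phrase matcher, not real NER, used only so the example runs without downloading a model. In practice a trained NER model (for example spaCy's `doc.ents`, which also labels spans as persons, organizations, places, dates, and so on) would take its place. All function names here are illustrative.

```python
import re
from collections import Counter
from itertools import combinations

# Hypothetical stand-in for NER: runs of capitalized words count as entities.
# This is NOT real named entity recognition; swap in a trained model for research.
CAPITALIZED = re.compile(r"\b[A-Z][a-z]+(?: [A-Z][a-z]+)*")
# Common sentence-initial words, dropped only when they are matched on their own.
STOPWORDS = {"The", "A", "An", "In", "On", "He", "She", "It", "They"}


def extract_entities(text):
    """Pass 1: return the distinct candidate entity names in text, sorted."""
    found = {m.group() for m in CAPITALIZED.finditer(text)}
    return sorted(found - STOPWORDS)


def concordance(text, names, width=30):
    """Pass 2: map each name to the snippets of text surrounding each mention."""
    rows = {}
    for name in names:
        pattern = re.compile(r"\b" + re.escape(name) + r"\b")
        rows[name] = [
            text[max(0, m.start() - width):m.end() + width]
            for m in pattern.finditer(text)
        ]
    return rows


def cooccurrence(documents):
    """Count, for each pair of entities, how many documents mention both."""
    counts = Counter()
    for text in documents:
        # extract_entities returns a sorted list, so each pair has one canonical order
        counts.update(combinations(extract_entities(text), 2))
    return counts


docs = [
    "Alice Smith and Bob Jones spoke in Boston.",
    "Alice Smith met Bob Jones again.",
    "Carol King spoke in Boston.",
]
# Two passes per article: first the entity list, then each entity's concordance.
dataset = {i: concordance(text, extract_entities(text)) for i, text in enumerate(docs)}
print(cooccurrence(docs).most_common(1))  # [(('Alice Smith', 'Bob Jones'), 2)]
```

Ranking pairs by the number of articles that mention them together is only one possible formalization of ‘association’. It flags pairs worth reading about; it says nothing about what the relationship actually is.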
Of course, the utility of such a procedure is threatened by the fact that many different entities share the same name, and that a single entity can change its name over time. These simple facts of history tend to escape the purview of computational approaches to text. The goal, though, is not to use digital humanities methods to derive research conclusions, but rather to use them as a tool for gaining entry into a new and nebulous topic.
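Name changes and shared names cannot be solved automatically, but they can at least be made explicit. The Python sketch below assumes a hand-curated alias table, built by the researcher rather than inferred by an algorithm, that maps each surface name and range of years to a canonical identifier. Every name, identifier, and date in it is hypothetical. When a name and year match more than one identifier, the function returns all of them, flagging the mention for human interpretation instead of guessing.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Alias:
    name: str       # surface form as it appears in the articles
    entity_id: str  # canonical identifier chosen by the researcher (hypothetical)
    start: int      # first year the name refers to this entity (inclusive)
    end: int        # last year the name refers to this entity (inclusive)


# Hypothetical entries: one organization that changed its name in 1970,
# and two different people who share a name during overlapping years.
ALIASES = [
    Alias("Acme Trading", "org:acme", 1950, 1969),
    Alias("Acme Holdings", "org:acme", 1970, 2000),
    Alias("John Smith", "person:smith-banker", 1900, 1960),
    Alias("John Smith", "person:smith-senator", 1940, 1990),
]


def resolve(name, year, aliases=ALIASES):
    """Return every canonical id that `name` could denote in `year`.

    An empty list means the name is unknown for that year; more than one id
    means the mention is ambiguous and needs a human reading of the article.
    """
    return [a.entity_id for a in aliases if a.name == name and a.start <= year <= a.end]


print(resolve("Acme Holdings", 1975))  # ['org:acme']
print(resolve("John Smith", 1950))     # ['person:smith-banker', 'person:smith-senator']
```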

Python for finance

I bought “Python for Finance”, published by O’Reilly, from Amazon the other day. Today I am getting started with the book, trying to follow along with as many of the code snippets as possible.

In the opening chapters of the book, I am struck by how difficult it is to get the basic environment ready for coding. Before you learn how to do 1+4, you have to set up a containerized cloud instance protected by an SSH key. In the past, I have simply run Python on my own computer, but this book has you set up your Python environment in a containerized app on the cloud. A few things struck me about the process.

First, there is the whole notion of containerization. Has anybody written about the connection between container ships and containerized apps? Cloud infrastructure seems to be an attempt to bring modularity to software development. The idea behind containerized apps is that you can create a self-contained application that does not depend on the file structure of any particular machine and can therefore be deployed on any machine. In finance, I assume the rationale behind containerization is twofold: first, to leverage the low cost of cloud infrastructure, and second, to take advantage of location. Because containerized financial programs are independent of local file structures, they can be tested and developed on cheaper servers and then deployed as close as possible to the exchange, on expensive digital real estate.
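One practical consequence of that independence from local file structure is that code should not hard-code paths tied to one machine. A minimal Python sketch of a common convention, assuming a hypothetical `DATA_DIR` environment variable that the container or cloud instance sets: the same script then runs unchanged on a laptop or in the cloud. This illustrates configuration by environment variable, not containerization itself, which is handled by tools such as Docker.

```python
import os
from pathlib import Path


def data_dir():
    """Locate the data directory without hard-coding a machine-specific path.

    DATA_DIR is a hypothetical environment variable: a container (or cloud
    instance) can set it to wherever its data is mounted, while a local
    run falls back to a ./data folder in the current working directory.
    """
    return Path(os.environ.get("DATA_DIR", "data")).resolve()


def data_file(name):
    """Build the path to a data file, e.g. data_file("prices.csv")."""
    return data_dir() / name
```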

Second, the book stresses security when setting up the coding environment. Unlike other books on programming with Python, it requires securing access to the coding environment with SSH key pairs. Because programs and data placed on remote servers may be exposed to hackers, security measures that keep them private are an essential practice.

It is interesting that these sophisticated command-line techniques are presented before the most basic principles of programming in Python. Only after setting up a secure containerized instance does the author introduce the reader to simple Python operations like addition, subtraction, and multiplication.

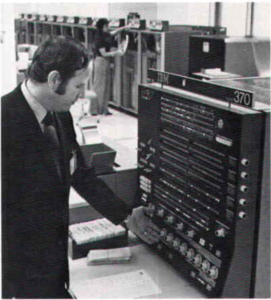

Securities Industry Automation Corporation’s Data Processing Equipment

In 1972, the New York Stock Exchange formed the Securities Industry Automation Corporation (SIAC) to automate order routing. The NYSE boasted in its 1973 Annual Report that, “SIAC data processing equipment, including 10 major computer systems, makes it one of the largest data processing service operations in the United States in terms of size.”