Advanced Googling It

In this online workshop, I introduced artists, academics, and activists to tools for finding digital resources and scraping data for research purposes.

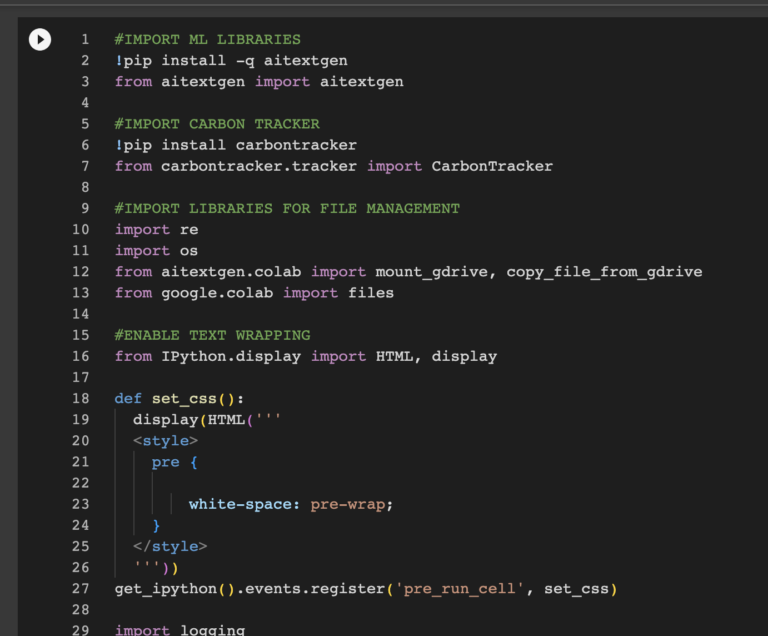

Introduction to Text Generation with Large Language Models

In this short course on text generation with large language models, I introduce students to the basic concepts behind transformer models and how they differ from earlier approaches to language generation. I also cover concerns about training data, bias, and the energy costs of training large models. In the hands-on component of the workshop, I guide students through a Python notebook that introduces concepts such as prompt engineering, fine-tuning, and methods for studying bias in text generation output. The notebook is structured so that it requires no prior knowledge of Python to complete, while still giving students a deeper understanding of how text generation models work.

Aesthetics and Politics of Algorithms

In this two-month online series for the independent LA arts organization Navel, I collaborated with the digital media artist Blaine O’Neill to host online discussions with artists and researchers who use or analyze AI in their work.